Foundation models like ChatGPT are transforming society with their remarkable capabilities, serious risks, rapid deployment, unprecedented adoption, and unending controversy. Simultaneously, the European Union (EU) is finalizing its AI Act as the world’s first comprehensive regulation to govern AI, and just yesterday (June 14, 2023) the European Parliament adopted a draft of the Act by a vote of 499 in favor, 28 against, and 93 abstentions. The Act includes explicit obligations for foundation model providers like OpenAI and Google.

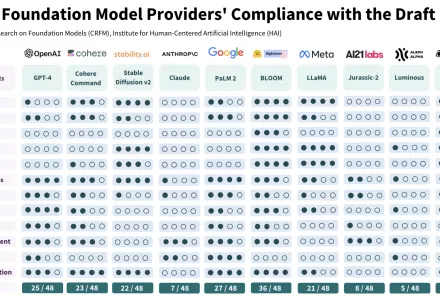

In this post, we evaluate whether major foundation model providers currently comply with these draft requirements and find that they largely do not. Foundation model providers rarely disclose adequate information regarding the data, compute, and deployment of their models as well as the key characteristics of the models themselves. In particular, foundation model providers generally do not comply with draft requirements to describe the use of copyrighted training data, the hardware used and emissions produced in training, and how they evaluate and test models. As a result, we recommend that policymakers prioritize transparency, informed by the AI Act’s requirements. Our assessment demonstrates that it is currently feasible for foundation model providers to comply with the AI Act, and that disclosure related to foundation models’ development, use, and performance would improve transparency in the entire ecosystem.

Motivation

Foundation models are at the center of global discourse on AI: the emerging technological paradigm has a concrete and growing impact on the economy, policy, and society. In parallel, the EU AI Act is the most important regulatory initiative on AI in the world today. The Act will not only impose requirements for AI in the EU, which has a population of 450 million people, but also set a precedent for AI regulation around the world (the Brussels effect). Policymakers across the globe are already drawing inspiration from the AI Act, and multinational companies may change their global practices to maintain a single AI development process. How we regulate foundation models will structure the broader digital supply chain and shape the technology’s societal impact.

Our assessment establishes the facts about the status quo and motivates future intervention.

- Status quo. What is the current conduct of foundation model providers? And, as a result, how will the EU AI Act (if enacted, obeyed, and enforced) change the status quo? We specifically focus on requirements where providers fall short at present.

- Future intervention. For EU policymakers, where is the AI Act underspecified and where is it insufficient with respect to foundation models? For global policymakers, how should their priorities change based on our findings? And for foundation model providers, how should their business practices evolve to be more responsible? Overall, our research underscores that transparency should be the first priority to hold foundation model providers accountable.

Rishi Bommasani, Kevin Klyman, Daniel Zhang, Percy Liang, "Do Foundation Model Providers Comply with the Draft EU AI Act?" Stanford Center for Research on Foundation Models, June 15, 2023.

The full text of this publication is available via Stanford University.