Data Sources, Applications, and Questions for Evaluating Ethics Risk

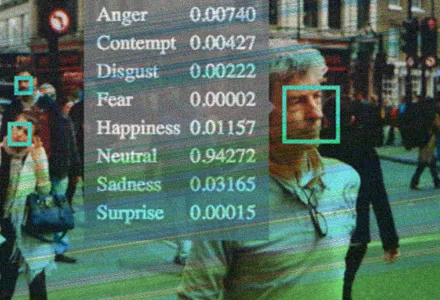

The year 2019 seemed to mark a turning point in both the deployment and the public awareness of artificial intelligence designed to recognize emotions and expressions of emotion. The experimental use of such AI spread across sectors and moved beyond the internet into the physical world. Stores used AI perceptions of shoppers’ moods and interest to display personalized public ads. Schools used AI to quantify student joy and engagement in the classroom. Employers used AI to evaluate job applicants’ moods and emotional reactions in automated video interviews and to monitor employees’ facial expressions in customer service positions.

It was also a year notable for increasing criticism and governance of AI related to emotion and affect. A widely cited review of the literature by Barrett and colleagues questioned the science underlying the claimed universality of facial expressions and concluded there are insurmountable difficulties in inferring specific emotions reliably from pictures of faces. The affective computing conference ACII added its first panel on misuses of the technology, with the aim of increasing discussion within the technical community about how to improve the way its research affects society. Surveys of public attitudes in the U.S. and the U.K. found that almost all of those polled considered some current advertising and hiring uses of mood detection unacceptable. Some U.S. cities and states started to regulate private and government use of AI related to affect and emotions, including through restrictions in some data protection legislation and face recognition moratoria. For example, the California Consumer Privacy Act (CCPA), which went into effect January 1, 2020, gives Californians the right to be notified about what kinds of data a business is collecting about them and how that data is being used, and the right to demand that businesses delete their biometric information. Biometric information, as defined in the CCPA, includes many kinds of data that are used to make inferences about emotion or affective state, including imagery of the iris, retina, and face; voice recordings; and keystroke and gait patterns and rhythms.

Greene, Gretchen. “The Ethics of AI and Emotional Intelligence.” Partnership on AI, July 30, 2020.

The full text of this publication is available via Partnership on AI.