This paper provides a brief overview of current AI-related biosecurity concerns and outlines where further analysis is still required.

“The biggest issue with AI is actually going to be … its use in biological conflict,” according to former Google CEO Eric Schmidt.[1] And he’s not the only AI expert who is worried. In testimony before the Senate Judiciary Committee’s Subcommittee on Privacy, Technology, and the Law, Anthropic CEO Dario Amodei warned that in just two to three years, AI could “greatly widen the range of actors with the technical capability to conduct a large-scale biological attack.”[2] OpenAI CEO Sam Altman has called for regulation of AI models “that could help create novel biological agents.”[3] President Biden’s recent Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence explicitly tasks relevant agencies with assessing the ways in which AI can increase, and potentially help mitigate, biosecurity risks.[4] But what exactly has experts and officials so worried?

Biological weapons are not a new concern

The deliberate use of microorganisms such as viruses or bacteria to cause disease or death[5] has a long and terrible history: Japan weaponized typhus and cholera in World War II,[6] and the Soviet Union’s Cold War bioweapon program included producing and stockpiling smallpox, anthrax, and drug-resistant plague.[7] The United States also ran its own bioweapon program during this period, working with agents including anthrax and Q fever, until President Nixon terminated it in 1969.[8]

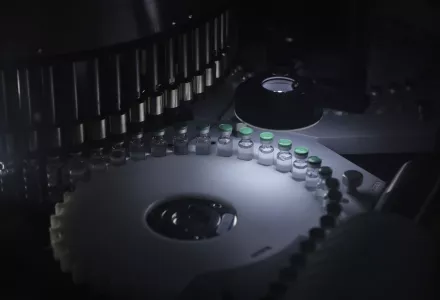

Today, the Centers for Disease Control and Prevention (CDC) warns that the bacterium that causes anthrax is one of the agents most likely to be used in a biological attack.[9] To date, developing, containing, and deploying such weapons has required significant resources and expertise.[10] These barriers have not confined such weapons to nation-states, but they have limited the number of actors capable of developing them.

Researchers are concerned AI might increase bioweapon know-how

AI experts are concerned that highly capable AI models could help non-experts design, synthesize, and use these weapons, thus expanding the pool of actors with access to these dangerous capabilities. These concerns increasingly center on future capabilities rather than on those of today’s models.

MIT students recently demonstrated how large language model (LLM) chatbots could help non-experts understand the process of manufacturing risky pathogens.[11] Within one hour, students without science backgrounds had used the chatbots to list four viruses capable of causing a pandemic, identify reverse genetics as a means of manufacturing them, and suggest acquisition methods that could help bypass misuse screening.[12] But in this and other experiments, such as a recent RAND red-team study, LLMs have not yet generated explicit, directly actionable instructions for creating bioweapons, and it is not clear that today’s LLMs offer significant advantages over information already available on the internet.[13]

In the future, more advanced AI capabilities may cause greater concern as LLMs become increasingly able to synthesize information into sophisticated, accurate, expert-level insights.[14] Even less advanced models, when trained on or applied to biological data, might give rise to biological risks. In 2022, an experiment showed how an AI model used in pharmaceutical design could be tweaked to design highly toxic chemicals instead.[15]

As AI models are increasingly trained and deployed to aid research on pathogens and cancers, they may similarly be co-opted to design new and more harmful pathogens. Given the potentially catastrophic impacts of biological agents, experts in AI, biology, and other relevant fields will need to collaborate to better understand these risks.

Biological science capabilities also shape bioweapon risks

Advances in synthetic biology are making biological development tools more readily accessible. A DIY Bacterial Gene Engineering CRISPR Kit can be bought online for as little as US$85,[16] and scientists regularly order custom DNA sequences from commercial labs, only some of which have committed to screening orders for misuse potential.[17] But it is not clear that AI-aided know-how combined with currently available commercial tools is sufficient for bioweapon risks to materialize.

Developing, storing, and deploying a novel pathogen or bioweapon is further complicated by the danger of handling such materials, and the need to evade detection presents additional challenges. Further analysis is needed of the risk mitigation mechanisms the private sector currently employs and its level of risk awareness, as well as of the impact of pandemic preparedness and response capabilities.

Deterrence and detection programs may need revision

If future AI and biological capabilities expand access to bioweapons, existing detection and deterrence arrangements may fall short. Some key methods of limiting biological weapons proliferation are aimed at nation-state actors. For example, international treaties like the Biological Weapons Convention aim to establish international norms against the manufacture, trade, or use of such weapons and to generate international pressure (including through complaints to the United Nations Security Council) to promote compliance. Yet this scheme may carry little weight with many adversarial state and non-state actors, including apocalyptic groups, some of which may also be indifferent to retaliation or blowback.[18]

Novel biological agents may also evade current detection and early warning mechanisms. The recent Executive Order takes some action on this front, tasking agencies with developing stronger standards for screening biological synthesis requests.[19] But further updates may be required. For example, the US Department of Homeland Security’s BioWatch Program, introduced in 2003 in response to the 2001 anthrax attacks,[20] operates in 30 jurisdictions across the US.[21] It monitors the air for select biological agents, such as the smallpox virus,[22] but would not necessarily be primed to detect a novel biological agent that AI might help create. Any evaluation of emerging risks should consider whether such regimes warrant further updates.

Conclusion

Developing a nuanced understanding of how technologies are changing biosecurity risks requires multidisciplinary expertise. The Defense, Emerging Technology, and Strategy Program at the Belfer Center will bring together experts across technology, biology, security, health, and international policy domains to grapple with these issues. Proactively understanding and addressing AI-biosecurity risks is key to mitigating catastrophic risk, as well as enabling responsible AI innovation.

The views and judgments in this article are those of the individual writers and do not represent the views or judgments of any current or former employers.

References

[1] Ryan Lovelace, “AI-Powered Biological Warfare Is ‘Biggest Issue,’ Former Google Exec Eric Schmidt Warns,” Washington Times, September 12, 2022, https://www.washingtontimes.com/news/2022/sep/12/ai-powered-biological-warfare-biggest-issue-former/.

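[2] Dario Amodei, written testimony, “Oversight of A.I.: Principles for Regulation,” Senate Judiciary Subcommittee on Privacy, Technology, and the Law, July 25, 2023, https://www.judiciary.senate.gov/imo/media/doc/2023-07-26_-_testimony_-_amodei.pdf.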

[3] Justin Hendrix, “Transcript: Senate Judiciary Subcommittee Hearing on Oversight of AI,” Tech Policy Press, May 16, 2023, https://techpolicy.press/transcript-senate-judiciary-subcommittee-hearing-on-oversight-of-ai/.

[4] The White House, “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” October 30, 2023, https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/.

[5] World Health Organization, “Biological Weapons,” accessed October 19, 2023, https://www.who.int/health-topics/biological-weapons.

[6] Friedrich Frischknecht, “The History of Biological Warfare,” EMBO Reports 4, no. Suppl 1 (June 2003): S47–52, https://doi.org/10.1038/sj.embor.embor849.

[7] Frischknecht.

[8] Nuclear Threat Initiative, “United States Biological Overview,” June 4, 2015, https://www.nti.org/analysis/articles/united-states-biological/.

[9] Centers for Disease Control and Prevention, “Anthrax as a Bioterrorism Weapon,” November 18, 2020, https://www.cdc.gov/anthrax/bioterrorism/index.html.

[10] Jaime M. Yassif, Shayna Korol, and Angela Kane, “Guarding Against Catastrophic Biological Risks: Preventing State Biological Weapon Development and Use by Shaping Intentions,” Health Security 21, no. 4 (August 2023): 258–65, https://doi.org/10.1089/hs.2022.0145.

[11] Emily H. Soice et al., “Can Large Language Models Democratize Access to Dual-Use Biotechnology?” (arXiv, June 6, 2023), https://doi.org/10.48550/arXiv.2306.03809.

[12] Soice et al.

[13] Christopher A. Mouton, Caleb Lucas, and Ella Guest, “The Operational Risks of AI in Large-Scale Biological Attacks: A Red-Team Approach” (RAND Corporation, October 16, 2023), https://www.rand.org/pubs/research_reports/RRA2977-1.html.

[14] Anthropic, “Frontier Threats Red Teaming for AI Safety,” July 26, 2023, https://www.anthropic.com/index/frontier-threats-red-teaming-for-ai-safety.

[15] Fabio Urbina et al., “Dual Use of Artificial-Intelligence-Powered Drug Discovery,” Nature Machine Intelligence 4, no. 3 (March 2022): 189–91, https://doi.org/10.1038/s42256-022-00465-9.

[16] “DIY Bacterial Gene Engineering CRISPR Kit,” The ODIN, accessed October 19, 2023, https://www.the-odin.com/diy-crispr-kit/.

[17] Soice et al.

[18] Paul Cruickshank, Don Rassler, and Kristina Hummel, “A View from the CT Foxhole: Lawrence Kerr,” CTC Sentinel (Combating Terrorism Center at West Point), April 2022, https://ctc.westpoint.edu/a-view-from-the-ct-foxhole-lawrence-kerr-former-director-office-of-pandemics-and-emerging-threats-office-of-global-affairs-u-s-department-of-health-and-human-services/.

[19] The White House, “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.”

[20] Department of Homeland Security, “Biological Threat Detection and Response Challenges Remain for BioWatch (REDACTED),” March 2, 2021, https://www.oig.dhs.gov/sites/default/files/assets/2021-03/OIG-21-22-Mar21-Redacted.pdf.

[21] Department of Homeland Security, “The BioWatch Program Factsheet,” accessed October 19, 2023, https://www.dhs.gov/publication/biowatch-program-factsheet.

[22] Department of Homeland Security, “Biological Threat Detection and Response Challenges Remain for BioWatch (REDACTED).”

Egan, Janet, and Eric Rosenbach. “Biosecurity in the Age of AI: What’s the Risk?” November 6, 2023.