Exploring Data Privacy Challenges and Approaches Across Areas of Practice


Contributors

Georgetown Ethics Lab

Jonathan Healey, Assistant Director

Sydney Luken, Designer

Mapbox

Tom Lee, Policy Lead

@tjl

Center for Open Data Enterprise

Katarina Rebello, Director of Programs

@kmrebello

Joel Gurin, President

@joelgurin

Paul Kuhne, Roundtables Program Manager

Salesforce

Marla Hay, Director of Product, Privacy and Data Governance

@marla_hay

Executive Summary

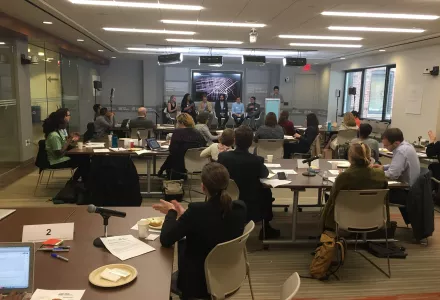

In May 2019, the Harvard Kennedy School’s Technology and Public Purpose (TAPP) Project and New America’s Public Interest Technology team hosted a roundtable in Washington, D.C., that examined data privacy through the lens of two key questions:

- How might organizations and legislators collaborate to translate aspirational privacy principles into product design and development?

- How might data privacy legislation more effectively meet user needs?

This report, Understanding Data Privacy Protections, synthesizes key insights from the workshop and uses four case studies to highlight the nuances of privacy protection across different organizations and strategies. To encourage open discussion during the workshop, we include some of the discussion, questions, and ideas throughout this report without attribution.

The co-leading teams convened more than 40 privacy-minded advocates, academics, researchers, political and government officials, practitioners, and lawyers to exchange expertise and views on personal data privacy regulation. The group developed a broad set of ideas on how organizations that handle personal data should frame data rights and consent processes, and it discussed possible improvements to data privacy legislation.

The event highlighted ongoing data privacy efforts, ranging from academic research on technical data privacy at Carnegie Mellon to Mozilla’s application of data privacy principles in Firefox browser features. Lightning talks featured several experts who have implemented privacy-protecting features, policies, or principles in their own organizations, including John Wilbanks, Chief Commons Officer at Sage Bionetworks; Tom Lee, Policy Lead at Mapbox; Jasmine McNealy, Attorney and Professor in the Department of Telecommunication at the University of Florida; Marla Hay, Director of Product, Privacy and Data Governance at Salesforce; Glenn Sorrentino, Principal UX Designer at Salesforce; and Gil Ruiz, Legislative Assistant in the office of Senator Kirsten Gillibrand.

Following the lightning talks, the group broke into small, curated discussion sessions designed to share expertise across sectors and explore data privacy questions and concerns in three areas: civil rights and liberties, privacy design, and potential legislation.

Key insights:

- Many participants described the challenge of obtaining informed consent when doing so requires explaining to end users all the complexities of how their data is captured, stored, transferred, and managed. Organizations are turning to UX design patterns, testing icon-based representations, quizzes, and formative evaluation to improve the likelihood that users understand the terms before proceeding.

- Unlike consumer-focused platforms, Salesforce and Mapbox primarily serve businesses. Both are exploring more effective ways to convey privacy information by embedding privacy features and functionality in their platforms. Privacy protections need to be developed for business-to-business relationships, not only for business-to-consumer ones.

- Because technology changes quickly, organizations like the Center for Open Data Enterprise (CODE) are exploring which specific issues in existing policy and legislation, such as HIPAA, COPPA, and FERPA, need updates and improvements. What processes might help policymakers structure legislation so that these policies can be revised in a realistic and timely way?

- Companies like Mapbox that collect telemetry data use multiple strategies to process their trip data so that it is “useless for [potential third parties] to [track] individuals.” Striking a delicate balance, they continually study ways to “[make] data accessible to researchers, planners and customers without compromising user privacy.” Additionally, de-identification and anonymization of data are not the same thing.

- Privacy harms take a variety of forms that are largely context-dependent and can be grouped into types. For example, exposing someone’s financial data may cause different harms and impacts than exposing their social or religious affiliations. As policymakers and practitioners consider privacy-protecting regulations and mechanisms, they must specify and define these terms according to their uses and specific harms.

- There is a large gap between aspirational privacy principles (e.g., “Embed privacy from the beginning” or “Put users in control of their data”) and the work of operationalizing those principles in practice and product development. These principles often remain static when they should be revised iteratively as technology develops.

- Companies and organizations use a variety of privacy metrics, such as the “degree of privacy enjoyed by users in a system [and] the amount of protection offered by privacy-enhancing technologies.” Other methods of measuring privacy discussed at the IAPP’s Global Privacy Summit in March 2019 include Privacy Impact Assessments, industry benchmarks based on a set of standard criteria, the number of data breaches, and customer satisfaction.

Participants also highlighted key points of tension that often arise in their product and policy work. These conversations paralleled many of the conceptual investigations of Value Sensitive Design, a theoretically grounded approach to design: What values does a product’s design imply? How are various stakeholders affected by that design? How should trade-offs be weighed when implementing features? Several key trade-offs emerged from these discussions.

Key trade-offs and themes:

- User Empowerment vs. Ease of Use. Empowering users may mean that companies inundate them with complex tasks, while prioritizing simplicity may introduce paternalism and opacity.

- Personalization vs. Anonymization. In precision medicine, for example, the precise medical data that enables personalized care may leave no technically feasible way for a patient to remain anonymous. The incentives of the genetic data collector and the user may be directly at odds. The term anonymization is also often conflated with de-identification.

- Benefits and Risks to Society vs. Company vs. Individual. Taking healthcare data as an example, patients often donate personal genetic data to learn how to improve their lifestyle or prevent health-related issues. Organizations that collect genetic data may want to gather as many data points as possible so they can share them with researchers and learn about population-level trends that reveal opportunities for innovation or intervention. For-profit companies may be incentivized to collect data to sell to pharmaceutical companies. While these parties may share some interests, such as improving general health and wellbeing for future at-risk patients, their priorities are often at odds.

Discussions of privacy trade-offs are closely tied to discussions of benefits and costs. Researchers have developed ways of understanding how human needs and values, such as privacy, figure in product design. A study by the Annenberg School for Communication at the University of Pennsylvania questions the premise that Americans consent to data collection because they judge the benefits worth the costs. Instead, it finds that “a majority of Americans are resigned to giving up their data—and that is why many appear to be engaging in trade-offs,” feeling resigned to the “inevitability of surveillance and power of marketers to harvest their data.” A Value Sensitive Design concept highlighted by Friedman et al. posits that people form trust by weighing potential harms, the good will “others possess toward them,” and whether any harms “occur outside the parameters of the trust relationship.” In conversations about policy creation, many attendees discussed their organizations’ values, implementation, and decision-making frameworks in depth.

Some attendees highlighted the potential downsides of framing privacy protections as binary trade-offs. In product design, the challenge is often to balance personalization and anonymization of a service. This framing, however, can imply that end users must give up empowerment entirely to gain ease of use. “What is the right balance between the two?” one attendee asked. Conversely, others appreciated the trade-off framework for highlighting where some decisions may create unintended consequences and inefficiencies elsewhere.

Several participants stressed the importance of not oversimplifying privacy for the sake of speed, ease, and familiarity. There must be more education and public awareness about how privacy depends on context, users, and the challenges unique to particular industries. The following case studies highlight some of the similarities and differences across four organizations that attended the event.

Nguyen, Stephanie, Afua Bruce, and Laura Manley. “Understanding Data Privacy Protections Across Industries.” Belfer Center and New America, November 2019.