2020-21 “Smart City” Cautionary Trends & 10 Calls to Action to Protect and Promote Democracy

Why Public Spaces Are Important for Democracy

The extent to which “smart city” technology is altering our sense of freedom in public spaces deserves more attention if we want a democratic future. Democracy, the rule of the people, constitutes our collective self-determination and protects us against domination and abuse. Democracy requires safe spaces, or commons, where people can organically and spontaneously convene, regardless of their background or position, to campaign for their causes, discuss politics, and protest. These commons, where anyone can take a stand and be noticed, are where a notion of collective good can be developed and communicated. Public spaces, like our streets, parks, and squares, have historically played a significant role in the development of democracy. We should fight to preserve the freedoms intrinsic to our public spaces because they make democracy possible.

Last summer, approximately 15 to 26 million people participated in Black Lives Matter protests after the murder of George Floyd, making it the largest mass movement in U.S. history.1 In June, the San Diego Police Department obtained footage2 of Black Lives Matter protesters from “smart streetlight” cameras, sparking shock and outrage from San Diego community members. These “smart streetlights” were promoted as part of citywide efforts to become a “smart city” to help with traffic control and air quality monitoring. Despite discoverable documentation about the streetlights’ capabilities and data policies on their website, including a data-sharing agreement about how they would share data with the police, the community had no expectation that the streetlights would be surveilling protesters. After media coverage and ongoing advocacy from the Transparent and Responsible Use of Surveillance Technology San Diego (TRUSTSD) coalition,3 the City Council set aside the funding for the streetlights4 until a surveillance technology ordinance was considered, and the Mayor ordered the 3,000+ streetlight cameras turned off. Due to the way power was supplied to the cameras, they remained on,5 but the city reported it no longer had access to the data it collected. In November, the City Council voted unanimously in favor of a surveillance ordinance and to establish a Privacy Advisory Board.6 In May, it was revealed that the San Diego Police Department had previously (in 2017) withheld materials from Congress’ House Committee on Oversight and Reform about its use of facial recognition technology.7 This story, with its mission creep and mishaps, is representative of a broader set of “smart city” cautionary trends that took place in the last year. These cautionary trends call us to ask: if our public spaces become places where one fears punishment, how will that affect collective action and political movements?

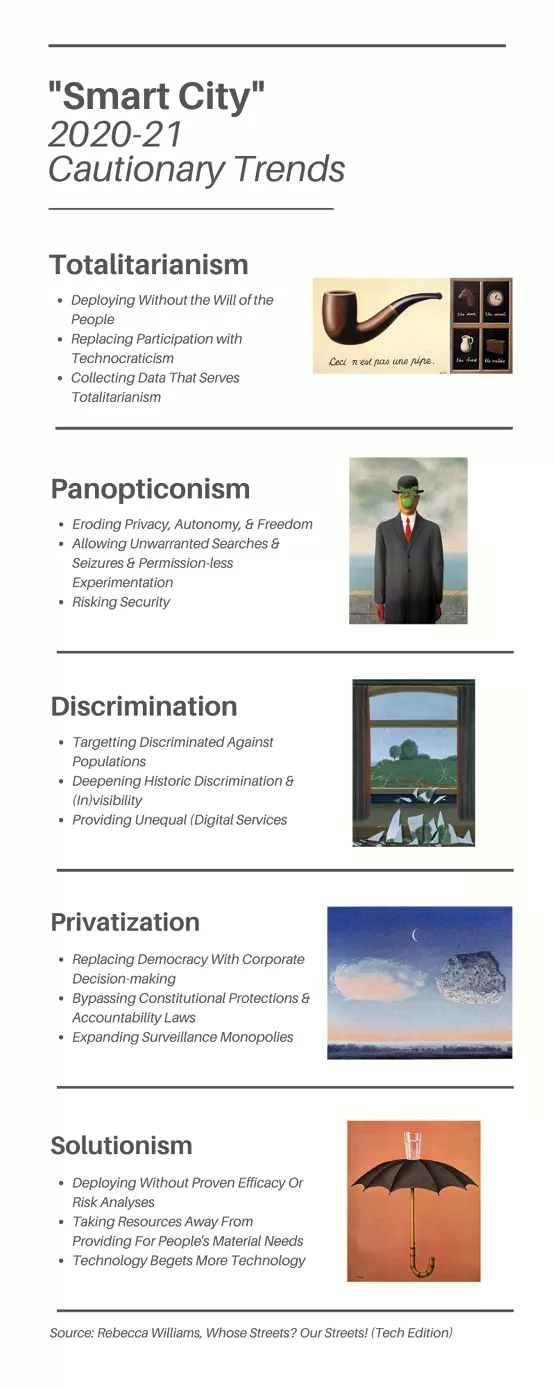

This report is an urgent warning of where we are headed if we maintain our current trajectory of augmenting our public space with trackers of all kinds. In this report, I outline how current “smart city” technologies can watch you. I argue that all “smart city” technology trends toward corporate and state surveillance and that if we don’t stop and blunt these trends now, totalitarianism, panopticonism, discrimination, privatization, and solutionism will challenge our democratic possibilities. This report examines these harms through cautionary trends supported by examples from this last year and provides 10 calls to action for advocates, legislatures, and technology companies to prevent these harms. If we act now, we can ensure the technology in our public spaces protects and promotes democracy and that we do not continue down this path of an elite few tracking the many.

How A “Smart City” Watches You

“Smart city” technology surfaced as a concept more than 20 years ago8 and serves as an umbrella term for a wide range of technologies collecting and transmitting data in the city environment. For simplicity, this report will focus on “smart city” technology that is capable of collecting data that can identify individuals because that data can be used to target individuals, which in turn can erode the sense of safety and inclusivity requisite for public spaces to serve as commons for democratic functions.

Technology

Many types of technology may fall under the umbrella term of “smart city” technology. This report will focus primarily on the hardware and software associated with cameras, location trackers, and sensors. These technologies are common components in broader “smart city” technologies and projects, and they have the high-risk ability to collect data that can directly, or in combination with other data, identify individuals. All technologies have inherent affordances, that is, qualities or properties that define their possible uses or clarify how they can or should be used. These technology components all have the inherent capability to track individuals. Simply put, these are devices that may be watching you.

Cameras

Security cameras in public spaces first became a common practice in the 1970s and 1980s. The proliferation of these cameras, their capacity to identify individuals, and their uses by law enforcement are steadily increasing. Public Closed-Circuit Television (CCTV) cameras come in various shapes and sizes and may be attached to various government-owned fixtures such as buildings, telephone poles, and traffic lights. In addition, cameras may be inconspicuously attached to unmanned aerial vehicles (UAVs) like drones or spy planes or built into other devices like kiosks, mirrors, parking meters, poles, scooters, street lights, and traffic lights. Lastly, law enforcement may be wearing body-worn cameras. In addition to government-owned cameras, privately-owned cameras, including cameras built into cell phones, may be filming you. These cameras may have real-time biometric recognition software built into the device itself, such as facial recognition, fingerprint recognition, iris recognition, gait recognition, and tattoo recognition. These sets of software are often also referred to as “technologies.”

Location Trackers

Cell phones and various transportation-related technologies collect location data. If governments or corporations collect this data at an individual level, it can be used to identify individuals and track their every move. Cell phones can disclose your location in many ways, including mobile signal tracking from towers, mobile signal tracking from cell-site simulators, Wi-Fi, Bluetooth, and location information from applications and web browsing.9 Bikeshare, rideshare (including taxis), and scooters can collect individual trip data via GPS or their associated cell phone application and privately owned vehicles can provide telematics data or “vehicle forensics kits” to third parties. Public transit can collect individual trip data and link it to your transit card or personal financial information. Mobility data can be collected by third-party devices such as Automated License Plate Readers (ALPRs), Intelligent Transportation Systems, or cell phone location data brokers. ALPRs are commonly mounted on tow trucks, law enforcement cars, and surveillance cameras. In addition to cameras and sensors, Intelligent Transportation Systems can include induction loops, infrared, radar, sound or video imaging, or Bluetooth that collect mobility data.

Sensors

Sensors convert stimuli such as heat, light, sound, and motion into electrical signals and can be used to identify individuals’ presence or identifying qualities. Some common applications of using these sensors to watch people in cities have been the use of audio sensors (like ShotSpotter10) to detect and analyze audio signals, infrared sensors, Light Detection and Ranging (LiDAR) sensors, motion sensors (that use microwave, reflective, ultrasonic, or vibration sensing) to detect moving people and vehicles, and thermal sensors which are commonly used to detect the heat of suspects or victims by law enforcement.

Other Watching Technology

Other “smart city” technology items that are not covered in the broad three categories above that may be collecting data that can identify individuals include Information and Communications Technology (ICT) infrastructure (cell phone towers powering up to 5G11 and public Wi-Fi), Internet of Things (IoT) or GPS-system connectivity to various things such as electronic monitoring, “smart tags” and RFID Chips, online activity (of government websites, financial transactions, social media analysis), police robots (like Boston Dynamics’ digidogs12 or Knightscope’s rolling pickles13), and “smart kiosks” and USB ports.

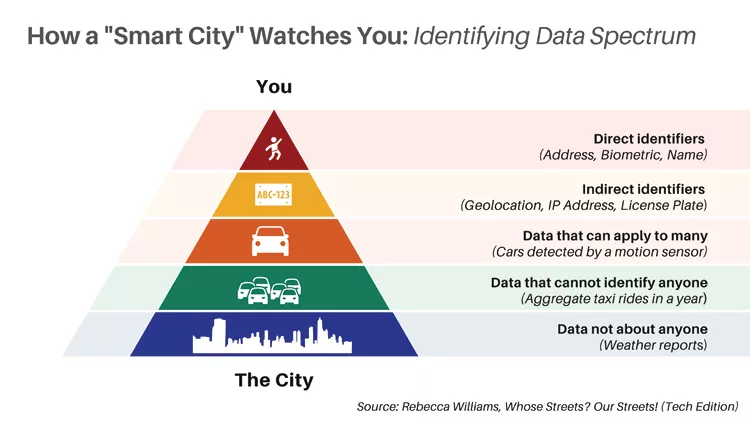

Data

What data cameras, location trackers, and sensors collect, whether or not it is being analyzed in real-time, and how it is managed throughout its life all affect its likelihood of creating risks for individuals. Identifying data exists on a spectrum.14 For example, data with “direct identifiers” such as your name, biometric, or address can readily identify you. This data type has historically been categorized as personally identifiable information (PII) and is the riskiest data for the technologies above to collect. Further along the spectrum, and the next riskiest are “indirect identifiers” that combine with other data to identify you—for example, your IP address, geolocation, or license plate number. Further still along the spectrum are data that can be ambiguously linked to multiple people—for example, cars detected by a motion detector.

This data by itself may not be able to identify you, but in certain contexts it may be able to. As more data is collected and data joining techniques become more sophisticated, so does the ability to re-identify someone within a seemingly ambiguous dataset. This ability is commonly referred to as the “mosaic effect” derived from the mosaic theory of intelligence gathering, in which “disparate pieces of information—although individually of limited utility—become significant when combined with other types of information.”15 As re-identifying risks increase, data practitioners are challenged with the trade-offs of collecting granular data, which can be of high research utility and protecting against re-identification harms.
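The mosaic effect described above can be illustrated with a small sketch. Everything in it is hypothetical: the two datasets, the field names (`home_block`, `plate_suffix`), and the `reidentify` helper are invented for illustration and do not come from any real system. The sketch shows, under those assumptions, how a "de-identified" dataset that resists matching on one indirect identifier can give up names once a second indirect identifier is joined in.

```python
from collections import defaultdict

# Hypothetical "de-identified" sensor records: no names, only indirect identifiers.
trips = [
    {"trip_id": "t1", "home_block": "400 Elm St", "plate_suffix": "7XQ"},
    {"trip_id": "t2", "home_block": "400 Elm St", "plate_suffix": "2KD"},
    {"trip_id": "t3", "home_block": "90 Oak Ave", "plate_suffix": "9ZZ"},
]

# Hypothetical second dataset that does carry names.
registry = [
    {"name": "Resident A", "home_block": "400 Elm St", "plate_suffix": "7XQ"},
    {"name": "Resident B", "home_block": "400 Elm St", "plate_suffix": "2KD"},
    {"name": "Resident C", "home_block": "90 Oak Ave", "plate_suffix": "9ZZ"},
    {"name": "Resident D", "home_block": "90 Oak Ave", "plate_suffix": "9ZZ"},
]


def reidentify(anonymous_rows, named_rows, keys):
    """Return {trip_id: name} for rows whose key combination matches exactly one person."""
    index = defaultdict(list)
    for row in named_rows:
        index[tuple(row[k] for k in keys)].append(row["name"])

    matches = {}
    for row in anonymous_rows:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match is a re-identification
            matches[row["trip_id"]] = candidates[0]
    return matches


# One indirect identifier alone is ambiguous: every block has two residents.
one_key = reidentify(trips, registry, ["home_block"])

# Joining a second indirect identifier singles out some individuals.
two_keys = reidentify(trips, registry, ["home_block", "plate_suffix"])

print(one_key)
print(two_keys)
```

In this toy data, adding one more field moves two of the three records from ambiguous to uniquely identified, which is the trade-off data practitioners face when deciding how granular a collected dataset should be.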

Throughout this report, I will refer to data that can directly or indirectly or through re-identification techniques identify an individual as “identifying data.” I will argue that in the “smart city” context, identifying data collection can lead to harms that threaten democracy, so the question for communities will be, what utility is worth that trade-off? Further down the identifying data spectrum is data that cannot be linked to a specific person, such as aggregated taxi rides in a year. And lastly, on the spectrum is data that is not linked to individuals, like weather reports.

Whether or not identifying data is analyzed in real-time, as opposed to historical snapshots, affects its riskiness because it allows for real-time targeting. Lastly, beyond the collection of identifying data, how these datasets are managed throughout the entire data lifecycle (stored, accessed, analyzed, joined, etc.) can expand the ability to identify, and thus target, individuals.

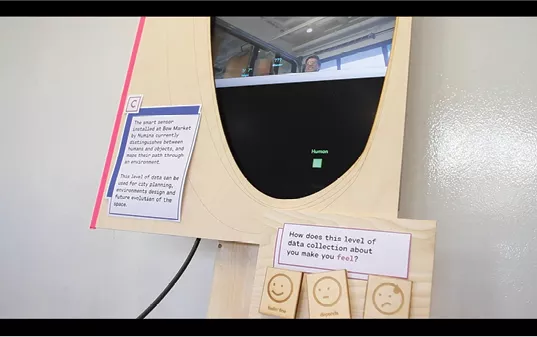

While the above technologies can collect data that can be used to identify you, that does not necessarily mean that they do or that they have to. For example, cameras can be replaced with motion detectors or configured with “video anonymization software” to collect blurred images. Images can also be altered after collection to better protect against re-identification with image-altering tools that blur or pixelate and strip identifying metadata or use generative adversarial network (GAN) escape detection techniques to create fake derivative images that look similar to the naked eye. (These post-collection techniques may not successfully protect against the risks of re-identification by highly skilled technologists now or in the future, as traces of the original image may be detectable.) On the other hand, cameras can be augmented with facial recognition technology, which can use algorithms to match a crisp image of your passing face with a known image of you. Although relatively new, facial recognition technology got twenty times better at recognizing a person out of a collection of millions of photos between 2014 and 2018. In addition, the technology has become much more affordable. Until recently, it was safe to assume you would remain anonymous in public spaces unless you ran into someone you knew. If facial recognition technology is scanning public spaces at all times, that is no longer a safe assumption,16 which changes the nature of public spaces and how we will operate in them. At the farthest end of the spectrum, cameras can be augmented with real-time biometric recognition capabilities to quickly identify you and look up related datasets about you to form a composite of who you are. These big data systems are already being used, such as in Kashgar, where a state-run defense manufacturer, China Electronics Technology Corporation, runs a high-tech surveillance system to monitor and subdue millions of Uyghurs and members of other Muslim ethnic groups.17 Examples of these developing big data systems in the U.S. include advanced analytics promising “digital twins,” predictive policing, fusion centers or real-time crime centers, and video analytics. IDEO CoLab illustrated this range of data possibilities for cameras in an interactive art exhibit. See screengrabs below.

Location trackers can range in granularity of data between individual trips and aggregate yearly trips. Personal trip data can easily be used to connect you to specific sensitive trips. For example, when a trip begins at a sensitive location such as a political protest, all the government would need to know is who lives at the house at the end of the trip to identify them and note their involvement in the demonstration. Location trackers can range in how location data is joined with other datasets. For example, cell phone applications sometimes include subcomponent software, like X-Mode,18 that can track your location across applications to create a complete dataset of your location at all times.
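To make the trip example above concrete, here is a minimal sketch of how little processing individual trip data needs before it points at specific homes. The coordinates, the trip records, the 150-meter radius, and the `trips_leaving` helper are all hypothetical, chosen only for illustration; the distance calculation is the standard haversine formula.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    earth_radius_m = 6371000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * earth_radius_m * math.asin(math.sqrt(a))


# Hypothetical location of a demonstration.
PROTEST = (32.7157, -117.1611)

# Hypothetical individual trip records with no rider names attached.
trips = [
    {"trip_id": "a", "start": (32.7158, -117.1612), "end": (32.7500, -117.1300)},
    {"trip_id": "b", "start": (32.8000, -117.2000), "end": (32.7600, -117.1000)},
    {"trip_id": "c", "start": (32.7150, -117.1605), "end": (32.7000, -117.1500)},
]


def trips_leaving(location, trip_rows, radius_m=150):
    """Select trips that started within radius_m of the given location."""
    return [t for t in trip_rows if haversine_m(*location, *t["start"]) <= radius_m]


flagged = trips_leaving(PROTEST, trips)

# The end points are the only extra fact needed to look up who lives there.
end_points = {t["trip_id"]: t["end"] for t in flagged}
print(end_points)
```

Aggregating trips (for example, yearly counts per neighborhood) before storage would remove the per-trip start and end points that this kind of filter depends on.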

Sensors range in how they sense people, sometimes collecting identifying data such as biometrics, re-identifying data such as drug use in water,19 or aggregate foot traffic data while generating energy.20 While many sensors are not directly aimed at tracking individuals, that does not mean that information collected from them could not be paired with other data streams like facial recognition and artificial intelligence (AI) against an individual. Steve Bellovin, a computer science professor at Columbia University, offered the Wall Street Journal21 the following hypothetical: “might a pollution sensor detect cigarette smoke or vaping, while a Bluetooth receiver picks up the identities of nearby phones? Insurance companies might be interested.” Still other sensors, such as precipitation sensors, may be collecting data about the environment that cannot be used to identify anyone.

Uses

While certain technologies create dangerous affordances and identifying data creates inherent risks to individuals, how this data is legally permitted to be accessed and used is of great significance to societal power structures and effects on democracy. Today, “smart city” technology, often sold under a banner of “collective goods,” is knowingly or unknowingly being repurposed by law enforcement for widespread surveillance and punitive purposes. Specific examples of this mission creep will be detailed further in the report.

To minimize the harms of this technology, some jurisdictions have begun to regulate facial recognition technology22 and surveillance technology.23 To reduce the harms that can arise from collecting identifying data, some jurisdictions have started to regulate the collection of biometrics,24 consumer data,25 and general data protection, like the European Union’s (EU) General Data Protection Regulation (GDPR), and specific other datasets. Finally, to minimize the harms of these uses, some jurisdictions have begun regulating how this data can be used after it is collected, such as banning the use of facial recognition databases like ClearviewAI in Canada26 or the sharing of personal transit data by transit agencies without a warrant in Massachusetts.27 Many of these laws regulate all three aspects of technology, data, and use to some extent. Still, I have found it helpful to think about these items distinctly because they each have different ways of creating harm and different regulatory challenges, which will become evident as we explore these harms and interventions further.

2020-21 “Smart City” Cautionary Trends

Below I have briefly outlined cautionary trends of where “smart city” technology may be headed. No section is intended to provide a comprehensive description of these concepts, nor are the examples an exhaustive account of these phenomena. Instead, this framework is intended to be a provocation and a 2020-21 highlight reel of “smart city” technology cautionary tales, with all of the cited examples taking place in the last year. While this report is primarily intended for a U.S. audience, these problems are global, and these cautionary tales come from around the world to highlight that these harms are not hypothetical but happening today. My hope is that readers will consider these possibilities and their likely ends and build on these ideas.

Totalitarianism

Totalitarianism is a form of government that controls all aspects of its people’s public and private lives and is often described by scholars as the opposite of democracy. In The Human Condition, Hannah Arendt articulates how the public realm is requisite to pluralism and democracy itself:

This attempt to replace acting with making is manifest in the whole body of argument against “democracy,” which, the more consistently and better reasoned it is, will turn into an argument against the essentials of politics. The calamities of action all arise from the human condition of plurality, which is the condition sine qua non for that space of appearance which is the public realm. Hence the attempt to do away with this plurality is always tantamount to the abolition of the public realm itself. The most obvious salvation from the dangers of plurality is monarchy, or one-man-rule, in its many varieties, from outright tyranny of one against all to benevolent despotism and to those forms of democracy in which the many form a collective body so that the people is “many in one” and constitute themselves as a “monarch.”28

In The Origins of Totalitarianism, she goes on to describe how the destruction of the public realm fuels totalitarian rule stating, “totalitarian government, like all tyrannies, certainly could not exist without destroying the public realm of life, that is, without destroying, by isolating men, their political capacities.”29 “Smart city” technology disrupts and destroys the public realm in our cities in three ways:

- It is deployed without the will of the people;

- It replaces a participatory dialogue, “What would we like in our neighborhood?” with technocratic analysis by the state, “They will design data collection that will inform them to what they will do with our neighborhood;” and

- It is designed with identifying data collection that empowers mono-ideology, a police state, and the chilling of freedom of expression and dissent.

Deploying Without the Will of the People

A first-order question is whether the local community wants the “smart city” technology. To know the answer to this question, government representatives must facilitate ongoing engagement with community members to learn what they desire in their community and if participatory data collection is a part of their collective goals. When governments deploy technology that can identify individuals and their activity without an expression of their will (such as affirmative consent or an informed vote) or protections like warrant authorizations, it should be viewed as a totalitarian act. The government should not be tracking you without a democratic discussion and decision about that.

2020-21 “Smart City” Examples:

- In Toronto, community members protested the city’s deal with Sidewalk Labs, citing issues with the privatization of public space, the privacy implications of a corporation collecting data in public spaces, and accountability risks if the corporation was allowed to analyze and display, in fine detail, the activities, choices, and interpersonal interactions of the individuals who use the space. This protest exemplified that the community was not consulted before the city sought out procurement with Sidewalk Labs.30

- In Detroit, the public spoke against a $2.5 million plan to install hundreds of traffic light-mounted cameras at city intersections in a public meeting.31

- In the U.S., after technology company Apple provided the capability to opt-out of app tracking in their iPhone iOS 14.5 update, 96% of users used it,32 strongly suggesting that when given that option, people do not want to be tracked by applications on their phone.

Replacing Participation With Technocraticism

The next question is, does “smart city” technology help democratic input and governance by the people? “Smart city” technology, data, and uses currently skew top-down, with government officials and their vendors deciding what is collected and how it is used, rather than a more bottom-up approach, where people willingly participate in defining collective goals and the design of any related data collection. Further, the rhetoric of data-driven government, “Moneyball for government,” behavioral nudges, and evidence-based policy popular in U.S. government management circles rests on the premise that if the government collects enough data, it can derive operational efficiencies and save money managing government programs and services. With enough data, you can “manage” the people themselves. Technocratic analysis by governments is not new, but the vast expansion of surveilling individuals, rather than engaging in dialogue with them directly through participatory means, is unprecedented. This move away from participatory dialogue needs to be corrected if we want democracy to serve community needs.

2020-21 “Smart City” Examples:

- In Birmingham, Mayor Randall Woodfin declared, “If we don’t have witnesses to come forward, then our only other option is more [police] technology.”33

- In Lucknow, India, the police deployed facial recognition technology aimed at women’s faces claiming to use artificial intelligence (AI) to read whether or not a woman was in distress while walking about the city to alert law enforcement.34

- Globally, governments are using services like Media Sonar, Social Sentinel, and Geofeedia to analyze online conversations to try to gauge public sentiment. Even “privacy-protecting” companies like Zencity, which only offers aggregate data and forbids targeted surveillance of protestors, have been used for potentially alarming uses such as police monitoring of speech that is critical of police. When Pittsburgh City Councilor Deb Gross learned of the use of this tool (which was not disclosed to the city council before its use due to a free trial), she said, “Surveilling the public isn’t engaging the public, it’s the opposite.”35

Collecting Data That Serves Totalitarianism

Lastly, we must ask, does this “smart city” data collection support totalitarian qualities, such as mono-ideology, chilling of dissent, or a police state? An essential function of democracy is for the people to be able to debate different ideologies. In an increasingly watched world, the data collectors and managers set the narrative by what they choose to collect and what they do not. This narrative-setting quality can result in mono-culture or promote mono-ideologies. Because of this threat, the community should critically examine who collects the data and how they use it in story-telling. In the U.S., the First Amendment protects the five freedoms of speech, religion, press, assembly, and the right to petition (protest) the government. The surveillance imposed by “smart city” technology could have a chilling effect on community members feeling comfortable participating in these protected activities for fear of harassment or retaliation by law enforcement. Beyond the chilling of dissent, how does “smart city” technology fuel a police state? “Smart city” data supposedly collected for planning or efficiency purposes can be repurposed for enforcement purposes and currently without the requirement of review like a warrant. At what point does “safe city” rhetoric create an environment where you can effectively be tracked by law enforcement at all times?

2020-21 “Smart City” Examples:

- Globally, surveillance of protesters has harmed people’s right to privacy and right to free assembly and association. In the United States, the unprecedented participation in Black Lives Matter protests during the summer of 202036 was met with surveillance by streetlights in San Diego, business district cameras in San Francisco,37 Ring cameras in Los Angeles,38 helicopters in Philadelphia, Minneapolis, Atlanta, and Washington,39 spy planes in Baltimore40 and Florida,41 and facial recognition technology in New York,42 Miami,43 and Pittsburgh.44 At least 270 hours of aerial footage from 15 cities was gathered on behalf of the Department of Homeland Security (DHS).45 Abroad, protestors were monitored by facial recognition technology in China,46 England,47 Hong Kong,48 India,49 Israel,50 Myanmar,51 Russia,52 Slovenia,53 South Africa,54 Uganda,55 and the United Arab Emirates.56 In addition, facial recognition technology was used by laypeople to identify the rioters who stormed the U.S. Capitol on January 6th, highlighting that these identifying tools are available to anyone and that policymakers must take care in defining provisions to protect protestors.

- Globally, law enforcement has expanded their surveillance capabilities, such as Chula Vista’s experimentation with drones powered with artificial intelligence,57 U.S. Customs and Border Protection’s plans to collect the faceprint of virtually every non-U.S. citizen and store them in a government database for 75 years,58 and the expansion of “real-time crime centers” throughout the United States.59 In Greece, police have been given devices that let them carry out facial recognition and fingerprint identification in real-time.60 As will be detailed further in this report, the scope of data law enforcement has access to without a warrant extends far beyond their tools to seemingly benign infrastructure like streetlights and scooters and to private entities.

- Throughout the U.S., governments have not disclosed this expansion to the public. Washington, D.C. failed to publicly disclose its use of a facial recognition system until it arose in protest court documents,61 and San Diego failed to disclose its use of facial recognition to Congress.62 Governments have repeatedly lied about their use of these technologies, such as with Baltimore’s false statements about how the mass surveillance data from its surveillance plane was used,63 Long Beach’s broken promise to not send license plate data to ICE,64 and the New Orleans police’s use of facial recognition software to investigate crime despite years of assurances that they were not using it.65 Further, many police technology programs erected in the name of transparency have, under police management, failed to deliver on that promise, such as the Omaha and Tallahassee police departments’ failures to disclose police bodycam footage66 and the New York City police department’s failure to meaningfully disclose its use of surveillance technologies as required by the Public Oversight of Surveillance Technology Act.67

Panopticonism

Panopticonism, first proposed by English philosopher Jeremy Bentham in the 18th century and then criticized by French philosopher Michel Foucault in the 20th century, describes a system of control where prisoners don’t know when they are being watched, and thus act as though they are being watched at all times. As “smart city” technology increases the ability to watch people, it enables panopticonism in three ways:

- It erodes privacy, autonomy, and freedom by increasing how often you are watched;

- It allows for unwarranted searches and seizures and permission-less experimentation; and

- It risks our security, not just from surveillance by the state or the state’s corporate partners but via data breaches and foreign adversaries.

Eroding Privacy, Autonomy, & Freedom

As discussed, there are many ways “smart city” technology can create a genuine panopticon by being able to track you (via your identity) and your activity (via your movement and other data) at all times. Governments are currently expanding their cameras and identifying capabilities on the ground and in the air and obtaining mobility data without a warrant. This collection of identifying data is often done in the name of public safety. Still, there have not been many high-profile cases of this new collection delivering the security promised. For example, U.S. Customs and Border Protection scanned more than 23 million people in public places with facial recognition technology in 2020 and caught zero imposters. Further, while these tools have not yet fulfilled their promise, much of the rhetoric about implementing “smart city” technology, such as creating “digital twins” of community members’ movement around the city for government analysis or “real-time crime centers,” implies that a panopticon is the goal of these technologies. These goals should be categorically rejected. This invasion of privacy effectively creates a loss of liberty and social detriment and chills First Amendment rights.

2020-21 “Smart City” Examples:

- Globally, cities expanded their use of CCTV and aerial surveillance to unprecedented levels, such as China’s ‘Sharp Eyes’ program, which aims to surveil 100% of public space,68 Scotland’s major Edinburgh upgrade,69 and expansions throughout India in Chhattisgarh,70 Ludhiana,71 Shimla,72 and Visakhapatnam,73 including Uttar Pradesh’s expansive automated facial recognition cameras (AFRS),74 and in Bhubaneswar, where local news reported that police were “groping in dark” when a crime took place in a location with little CCTV installed.75

- Throughout the U.S., cities have expanded aerial camera surveillance, such as Baltimore’s Aerial Investigative Research (AIR) program76 and Chula Vista’s drones with artificial intelligence.77

- Globally, cities are augmenting this camera footage with identifying tools such as advanced video analytics by more than 35 law enforcement agencies in the US,78 digital profiles in Russia,79 and facial recognition technology in Buenos Aires,80 Brasilia,81 Como,82 Gurugram,83 South Orange, CA,84 and Uruguay.85 This high-risk technology has been used for civil case identification in Malaysia,86 and toilet paper enforcement in Dongguan, China.87 In addition, commercial facial recognition database tools like ClearviewAI (for law enforcement) and PimEyes (for the general public) make it possible to identify people based on images of their face without a warrant or their consent which opens up endless possibilities for abuse.

- Throughout the U.S., cities are collecting real-time private-sector mobility data, such as bike and scooter trip data, via the Mobility Data Specification.88

- Throughout the U.S., many jurisdictions have begun codifying these intrusive searches, such as North Carolina’s proposed Kelsey Smith Act, which allows law enforcement to obtain cell phone data without a warrant in an emergency,89 and Florida’s Use of Drones by Government Agencies law,90 thereby reinforcing such activities.

Allowing Unwarranted Searches & Seizures & Permission-less Experimentation

As “smart city” technology collects more and more identifying data, the ability to learn sensitive information by searching across these databases and joining these datasets also increases. The U.S. Constitution’s Fourth Amendment provides a “right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures” by the government. New cases asserting Fourth Amendment search violations in the digital age have slowly been changing Fourth Amendment doctrine. In Carpenter v. United States, the Supreme Court ruled that obtaining historical cell-site location information (CSLI) containing the physical locations of cell phones without a search warrant violated the Fourth Amendment. Before Carpenter, the government could obtain cell phone location records without a warrant. Since then, a series of Fourth Amendment cases have been brought to challenge the bounds of that ruling.91 Without strict limits via the Fourth Amendment or other privacy regulations, cities are experimenting with new technologies, such as police robots, to track individuals.

2020-21 “Smart City” Examples:

- In Leaders of Beautiful Struggle v. Baltimore Police Department, the US Court of Appeals for the 4th Circuit held92 that because the aerial surveillance program “enables police to deduce from the whole of individuals’ movements … accessing its data is a search, and its warrantless operation violates the Fourth Amendment.”93 It was only after an amicus brief revealed the AIR program was not being truthful about its surveillance capabilities94 that this case was reheard.

- In Sanchez v. Los Angeles,95 relying heavily on Carpenter, the American Civil Liberties Union (ACLU) and Electronic Frontier Foundation (EFF) argued that the Los Angeles Department of Transportation’s collection of bulk granular e-scooter data amounted to an unreasonable search. The District Court dismissed the case for failure to state a claim, not seeing the Carpenter connection. Since then, the ACLU and EFF have urged the U.S. Court of Appeals for the 9th Circuit to revive the challenge.

- In Structural Sensor Surveillance,96 Andrew Ferguson examines the privacy risks of a future in which sensors are integrated and connected enough to track an individual’s movement across a city, argues that such a world would violate our Fourth Amendment protections, and suggests preventative design interventions, such as less data and less connectivity.

- Police robots like Boston Dynamics’ “digidogs” and Knightscope’s “rolling pickles” are being tested and deployed in cities to navigate and traverse the terrain, read license plates with infrared cameras, and identify cell phones within their range down to the MAC and IP addresses.97

Risking Security

As “smart city” technology collects more identifying data, the risk of individual and collective exploits of that data increases. A data breach could lead to identity theft, causing threats to one’s safety and well-being or economic loss. Further, breaches can leave shared utility grids and governments vulnerable to hackers who may hold data hostage for ransom (also known as ransomware attacks), forcing governments to pay large sums of money to protect individual and national security.

2020-21 “Smart City” Examples:

- In 20 U.S. cities, surveyed transit agency officials found that cybersecurity concerns and protocols were inadequate across organizations of all sizes.98 Researchers at the University of California at Berkeley found that the “smart city” technologies most vulnerable to a cyberattack were emergency alert systems, street video surveillance, and some types of traffic signals. They recommended that leaders consider the significant disruption and negative consequences that would occur if these systems were hacked by bad actors and how that would affect community members.99

- Throughout the U.S., cameras, location trackers, and sensors attached to utilities have been breached during this last year. Cameras and associated biometric data have been breached by hackers inside of jails and hospitals, like the breach of more than 150,000 Verkada surveillance cameras,100 exposed by security bugs in and outside of people’s homes, like Eufy’s server glitch,101 and held for ransom, like when U.S. Customs and Border Protection contractor Perceptics had its pilot biometrics of travelers’ faces held hostage on the dark web.102 Location trackers have been breached with the hacking of social media applications like Parler disclosing location data.103 Utility sensors have been breached, as in the Colonial pipeline ransomware attack,104 the Tampa-area water utility hack,105 and the potential hacking of “smart intersections.”106 Identifying data has been hacked and held for ransom, including that of 22 police officers in D.C.107 and an undisclosed amount of individuals’ data in multiple cities during the pandemic.108

- Globally, in addition to cyberattacks by independent hackers, cybersecurity breaches of “smart city” technology have been linked to foreign adversaries. The lawful use of “smart city” technology provided by foreign companies has come under scrutiny for its ties to foreign espionage, such as technology from several China-based companies with Chinese military ties,109 including New York City’s subway cameras.110

Discrimination

Discrimination is when a person is unable to enjoy their human rights on an equal basis with others because of an unjustified distinction made in policy, law, or treatment based on their race, ethnicity, nationality, class, caste, religion, belief, sex, gender, language, sexual orientation, gender identity, sex characteristics, age, health or another status.111 In Dark Matters: On the Surveillance of Blackness, Simone Browne traces the oversurveillance and “hypervisibility” of Black people back to the “lantern laws” of the 17th and 18th centuries, which required slaves to carry a lantern or candle if they were walking at night without a white owner to make them more visible and trackable. “Smart city” technology continues this trend of discriminatory surveillance in three ways:

- It targets historically discriminated against populations; and

- It deepens historical discriminatory practices already present in public administration and it makes certain groups more or less visible than others; and

- It provides (digital) services to some groups of people and not to others.

Targeting Discriminated Against Populations

As “smart city” technology continues to collect identifying data, including data related to gender, race, and religious affiliation, stewards of this data can target ethnic and religious minorities and other marginalized and oppressed communities for monitoring, analyzing, enforcement, imprisonment, torture, and in worst-case scenarios, genocide.

2020-21 “Smart City” Examples:

- In Xinjiang, China, the government has reportedly been forcing more than 1 million ethnic minority Uyghur people into labor camps. To support these efforts, Chinese law enforcement agencies are surveilling the Uyghur population with facial recognition technology that is designed to identify Uyghur individuals,112 attempting to detect their emotions,113 and tracking their movements with the Integrated Joint Operations Platform, which monitors the “trajectory” and location data of their phones, ID cards, and vehicles.114 Technology companies such as Alibaba,115 Dahua,116 Huawei, Intel, Megvii, and Nvidia117 were all scrutinized for their part in aiding this detection and surveillance.

- In the U.S., federal immigration law enforcement procured location and timestamp data from Muslim prayer cell phone applications (Salaat First and Muslim Pro), collected via their embedded Software Development Kits (Telescope and X-Mode) and managed by data brokers like Predicio.118

- In Russia, the technology companies AxxonSoft, NtechLab, Tevian, and VisionLabs offer ethnicity analytics as part of their facial recognition technology offerings to the Russian government, raising concerns among researchers that the country’s law enforcement can track minorities.119

- In Lesbos, Greece, a range of experimental, rudimentary, and low-cost “smart city” technologies (drones, facial recognition, and LiDAR) were tested on refugees in camps first.120

- In Lucknow, India, the decision to surveil women (rather than perpetrators) for signs of street harassment via facial recognition technology perpetuates patriarchal controls that limit the choices of women, and results in an intrusion of privacy and autonomy of women in public spaces, which is essential for women to exercise their freedom and political action fully. Further, emotion recognition has continually been proven ineffective across genders.121

Deepening Historic Discrimination & (In)visibility

In addition to aiding the targeting of particular groups of people, “smart city” technology can exacerbate current discriminatory practices by reinforcing the spatial inequities rife in urban planning and the racial inequity in police technology,122 and by extending those inequities to digital worlds, which is often referred to as “digital redlining.”123 These technologies can replicate inequity, exacerbate inequitable harms, mask inequity, transfer inequity, and compromise inequity oversight.124 Placing facial recognition technology (which reads dark-skinned and women’s faces less accurately) in predominantly Black and Latino neighborhoods results in compounded bias. Potential harms that flow from disproportionate use or disparate impact include loss of opportunities, economic loss, and social detriment.125

2020-21 “Smart City” Examples:

- In Argentina,126 Brazil,127 Detroit,128 and Woodbridge,129 facial recognition technology misidentifications have led to the wrongful arrests of innocent individuals, resulting in distress, lost wages, and the undermining of people’s rights to due process and freedom of movement. The National Institute of Standards and Technology has found high rates of false positives in matching photos of Asians, African Americans, and Native groups,130 and researchers Joy Buolamwini and Timnit Gebru found an error rate of less than 1 percent for light-skinned men but at least 20 percent for dark-skinned women.131 Facial recognition technology’s accuracy is continually improving132 with new training data, including the ability to identify people with masks on,133 which could help address this harm but would, in turn, only strengthen the discriminatory targeting examples above.

- In Chicago, 13-year-old Adam Toledo was shot by police who had been alerted to the scene by ShotSpotter, a gunfire detection system. Here, the system created an unacceptable risk of officers responding to perceived threats in Black and Latino neighborhoods with deadly force, which would not have otherwise occurred.134 Throughout the U.S., ShotSpotter is disproportionately deployed in Black neighborhoods.135

- Throughout the U.S., cities such as Baltimore and D.C.136 have installed over two-and-a-half times more CCTV cameras in majority Black neighborhoods than in majority white areas. Cities with an above U.S.-average Black population are twice as likely to have a Police-Ring partnership surveilling their communities.137

- Throughout the U.S., various cities have used “smart city” technology to surveil and police the poor. In Tampa, the city has installed CCTV outside of public housing units, and Detroit has come under scrutiny by local activists for using facial recognition technology in public housing,138 spurring the introduction of federal legislation139 to prohibit “the use of biometric recognition technology in certain federally assisted dwelling units.” In parts of Florida, Louisiana, and Nevada, and throughout Oklahoma, roadway surveillance systems featuring ALPRs have been installed to bill uninsured drivers.140

- In Los Angeles, the act of looking up your information in predictive policing systems via ALPRs may increase your criminal risk score.141

Providing Unequal (Digital) Services

By contrast, there are instances where access to digital goods, including internet access,142 has not been equally provided to historically marginalized communities.

2020-21 “Smart City” Examples:

- In New York City, digital Wi-Fi kiosks were promoted as “a critical step toward a more equal, open, and connected city” by Mayor Bill de Blasio, but were unevenly clustered in Manhattan and its bordering neighborhoods, where people did not need these services but where CityBridge, the company behind LinkNYC, which relies on advertising revenue, could sell ads. More impoverished areas had few or no kiosks.143

- In Thailand, residents in the deep south, primarily home to the Malay Muslim population, began reporting targeted mobile network shutdowns after residents were required to re-register their SIM cards through a new facial recognition system.144

Privatization

Privatization is the transfer of a public commodity or service to private ownership and control. For example, many companies offering “smart city” technology effectively privatize government infrastructure or services–either through government procurement or public-private partnership agreements–and often the land the infrastructure occupies. In addition to replacing public services, technology companies are also displacing public services, such as how the heavily venture-backed and unregulated Uber and Lyft rideshare services have shifted public transit use to rideshare services. These companies also collect proprietary data, which they often exclusively control, creating another, and perhaps the most powerful, layer of privatization: the privatization of knowledge. “Smart city” technology advances privatization in three ways:

- It replaces democracy with corporate decision-making; and

- It bypasses constitutional protections and accountability laws; and

- It expands surveillance monopolies.

Replacing Democracy With Corporate Decision-making

The privatization of city services by corporations replaces democratic decision-making with corporate profit-driven decision-making. By owning “the digital layer,” companies can decide what information is collected and how it is used. When this data informs service delivery, the company, rather than voting, representation, or other democratic means, effectively controls how public services are delivered. This phenomenon is particularly insidious for “smart city” technologies that offer “black box” AI as part of their toolset, where not only do companies control the decision-making process, but that process is not visible to community members. Lastly, these proprietary data systems can create lock-in and dependency on technology companies, making it harder for governments to move away from this ownership and control.145

2020-21 “Smart City” Examples:

- In Nevada, Governor Steve Sisolak has proposed the legislature consider “Innovation Zones” that would create autonomous districts for private developers that own more than 50,000 acres of land (such as Blockchain, LLC) to take over responsibilities for tax collection, K-12 education, and other services. The proposal would allow companies to test out “revolutionary projects” and, in exchange, promise to invest up to $1 billion in the Zone and agree to an “innovative technology” tax.146

- In Toronto, Sidewalk Labs proposed147 to collect data on energy, parking, telecommunication, transportation, and waste management with the intent of establishing markets for how these assets are accessed, priced,148 and used until walking away from the project citing a weakened real estate market.

- Throughout the U.S., technology companies are affecting democratic decision-making directly by lobbying representatives, such as ClearviewAI lobbying against biometrics regulations,149 various technology companies lobbying against state privacy laws,150 and various data brokers151 lobbying against data regulation bills like the Artificial Intelligence in Government Act,152 Artificial Intelligence Initiative Act,153 Data Accountability and Trust Act,154 Data Broker Accountability and Transparency Act,155 and the Information Transparency & Personal Data Control Act.156

Bypassing Constitutional Protections & Accountability Laws

Many of our rights and accountability mechanisms disappear when “smart city” technology projects privatize public infrastructure and services. For example, the Fourth Amendment privacy protections of the US Constitution discussed earlier often do not apply to private-sector data collection due to the third-party doctrine. In many cases, this private-sector data collection (usually attached to a transaction) is much more granular than would be allowable under privacy regulations like the Health Insurance Portability and Accountability Act (HIPAA) or by an Institutional Review Board (IRB), which protects the rights and welfare of human research subjects. Also, where these companies are purchasing land (Sidewalk Toronto, Amazon HQ, Under Armour’s Port Covington project), these lands are converted to private ownership and are no longer protected by the First Amendment, which can prevent protest. Despite taking on quasi-government functions,157 technology companies are not currently required to comply with the accountability measures built into government functions, such as public records access, public audits, or consequences for elected officials if services do not meet community members’ expectations.

2020-21 “Smart City” Examples:

- Throughout the U.S., governments have acquired private security camera footage (through programs like Amazon’s Ring program158) and facial recognition services (through services like ClearviewAI) without a warrant. Further, government employees may be using these services without the knowledge of government leadership or legislative approval. BuzzFeed News showcased this with audits of police departments’ use of ClearviewAI,159 and again with five federal law enforcement agencies’ use of ClearviewAI that was not discovered by the Government Accountability Office’s audit.160

- Throughout the U.S., governments have acquired location data via cell phones (through application software X-Mode and Telescope161 and data brokers like Acxiom, CoreLogic, and Epsilon that provide a wide range of consumer data162), vehicles (through services like Flock’s TALON ALPR program163 and the vehicle data programs of iVe,164 Otonomo,165 and The Ulysses Group166), and scooters (through data sharing agreements like the Mobility Data Specification167) without a warrant.

- Throughout the U.S., governments have acquired utility records (through services like Thomson Reuters CLEAR168) from private-sector data brokers without a warrant.

Expanding Surveillance Monopolies

As technology companies expand their “smart city” offerings, this creates risks that users will be tracked across multiple systems. As large technology companies with ample stores of personal data and their affiliates become more engaged with “smart city” projects, this becomes more worrisome. At what point does a single corporation have “vertical integration” (in terms of identifying data) of a whole neighborhood? If a single technology company captures government technology markets, it will also effectively control the design, access, and availability of many different kinds of surveillance technologies. For example, a company that achieves platform dominance in policing would not only reap economic benefits, but would also gain enormous power over functions essential to issues of democratic policing.”169

2020-21 “Smart City” Examples:

- In Chongqing, BIG and Terminus discussed plans for a “smart city” project named Cloud Valley, which aims to collect data via sensors and devices to “help people live more comfortably by monitoring their habits and anticipating their needs,” explicitly striving to gather enough personal data that when customers walk into a bar, the bartender would know their favorite drink.170

- Throughout the U.S., large technology companies are becoming more engaged with “smart city” projects, including the involvement of Google’s affiliates with CityBridge in New York City, Sidewalk Labs in Toronto, and Replica in Oregon, as well as Amazon’s Ring partnership with law enforcement and its recent launch of Amazon Sidewalk, a mesh network for Amazon products expanding IoT connectivity.

Solutionism

Technological “solutionism,” coined by Evgeny Morozov in 2014, refers to the phenomenon of trying to reframe political, moral, and irresolvable problems as solvable by quantifying, tracking, or gamifying behavior with technology. In The Smart Enough City, Ben Green builds on this concept, describing the “tech goggles” perspective as:

At their core, tech goggles are grounded in two beliefs: first, that technology provides neutral and optimal solutions to social problems, and second, that technology is the primary mechanism of social change. Obscuring all barriers stemming from social and political dynamics, they cause whoever wears them to perceive every ailment of urban life as a technology problem and to selectively diagnose only issues that technology can solve. People wearing tech goggles thus perceive urban challenges related to topics such as civic engagement, urban design, and criminal justice as being the result of inefficiencies that technology can ameliorate, and they believe that the solution to every issue is to become “smart”—internet-connected, data-driven, and informed by algorithms—all in the name of efficiency and convenience. Seeing technology as the primary variable that can or should be altered, technophiles overlook other goals, such as reforming policy and shifting political power. The fundamental problem with tech goggles is that neat solutions to complex social issues are rarely, if ever, possible. The urban designers Horst Rittel and Melvin Webber describe urban social issues as “wicked problems,” so complex and devoid of value-free, true-false answers that “it makes no sense to talk about optimal solutions.”171

“Smart city” technology embodies and reinforces solutionism in three ways:

- It is deployed with little or no evidence that it is effective at solving the problem at hand and no examination of what risk it creates; and

- It takes budgets away from providing material goods and services to community members to collect more data instead; and

- It creates the need for more technology and thus more solutionism.

Deploying Without Proven Efficacy Or Risk Analyses

“Smart city” technology is steeped in solutionism, and its rhetoric and promotional materials are often couched in the promise of what it could solve rather than what it has demonstrably solved in similar instances. “Smart city” sales pitches argue that with more data collection, processes can inherently be made more efficient and thus solved. These claims don’t take into consideration failures in models or theories of change or the many externalities that impact a city.

2020-21 “Smart City” Examples:

- In Mexico City, the extensive C5 CCTV video surveillance system has failed to meet the promise of crime prevention in several ways: corrupt police use the footage as leverage rather than for enforcement, the system does not deter violent crimes, and while it may have an effect on larceny, it disproportionately affects the poor while not capturing white-collar crimes.172

- Technology companies themselves have struggled to home in on a business model, with Cisco–one of the first companies to use the “smart city” term–announcing in 2020 that it would stop providing its Kinetic for Cities software platform because “cash-strapped” cities were opting for solutions for “specific use cases.”173

Taking Resources Away From Providing for People’s Material Needs

Technology and data collection systems are costly to procure, manage, secure, and upgrade. While data can help you identify problems you were unaware of or home in on efficiencies, these efficiencies can be dwarfed by the costs of collecting more data. For example, with better traffic data collection, cities would still need policy interventions like congestion pricing or infrastructure interventions like new thruways or medians to reduce congestion or accidents; the data only helps to outline the problem, which the community is likely already aware of.

2020-21 “Smart City” Examples:

- In Utah, the state spent $20.7 million on a five-year contract with Banjo, an AI surveillance company that promised to analyze traffic cameras, CCTV cameras, social media, 911 emergency systems, and location data in real time, to create a “solution for homelessness,” and to detect “opioid events” using its Live Time software, which a review by a state auditor found not to have any AI capabilities.174

- Globally, during the COVID-19 pandemic, many cities invested in surveillance technologies such as heat mapping, face-mask detection, and artificial intelligence to monitor the capacity of the light rail system in New Jersey,175 AI cameras in Peachtree Corners,176 CCTV to detect crowds in the UK,177 and contact-tracing applications in many countries.178 Throughout the U.S., law enforcement agencies used federal Coronavirus Aid, Relief, and Economic Security (CARES) Act grants to purchase encrypted radios, body cameras, and communications upgrades.179 These surveillance technologies were prioritized while non-surveillance solutions such as testing, masks, and financial assistance were under-resourced in these same communities.

Technology Begets More Technology

Finally, the use of “smart city” technology encourages and in fact requires the use of more technology. For example, many cities are currently investing in the infrastructure needed for 5G upgrades and are being sold technologies to run on such a network. Similarly, cities procure one piece of technology and end up being offered add-ons, plug-ins, or enterprise services that can seem like a deal but lock cities in to specific vendors via proprietary data and software investment, creating an environment where the city must stay with that vendor because switching vendors would result in high costs of data transformation.

2020-21 “Smart City” Examples:

- In Oklahoma, companies like Rekor One are converting regular cameras into ALPRs.180

- In San Diego, the smart streetlights program (which came under scrutiny for filming protestors) had issues with over a million dollars in overrun costs due to a lack of oversight and separate fees for LED lighting and for the sensors to collect data and shoot video.181

10 Calls to Action to Protect & Promote Democracy

While the last year has provided many cautionary trends, it has also begun to outline paths forward to prevent these harms and, more importantly, ways to think differently about how technology influences and affects our broader political goals. To protect and promote democracy, I believe we must not only issue regulations that immediately blunt the ability to inflict the harms described in these cautionary trends but also build capacity for evaluating how technology affects society and fortify our democratic spaces with technology in mind.

To do so, we will need digital advocates to integrate into more substantive political advocacy movements that relate to the material needs of communities, legislatures to move beyond oversight and restore eroding rights and create new rights, and technology companies to rely on business models that do not create these harmful data markets and risks.

Below I outline 10 calls to action to protect and promote democracy based on current intervention strategies being deployed and leading theory in this space. While the cautionary trends included global examples, these calls to action are outlined with the current U.S. legal framework and regulations in mind. In addition to current intervention examples to build from, I have included resources and communities to build with. My hope is that, like the cautionary trends section of this report, these calls to action serve as a time capsule of the current policy options in 2020-21, but that these options expand and change through these communities and further global examples.

Stop Harmful “Smart City” Technology, Data, and Uses

1. Strictly Limit Law Enforcement Access to Identifying Data

To stop harmful “smart city” technology, data, and uses, we must halt the current mission creep in which “smart city” technologies are made available for dragnet searches by police without strict limits. To do this, we must examine how our current legal frameworks can be reinforced to consider these new risks and, where those frameworks are insufficient, how we can fill those gaps with policies and practices that properly address these new risks.

Current interventions that should be expanded to limit law enforcement access to identifying data include Fourth Amendment litigation and scholarship, regulation, whistle-blowing, audits by third parties, and corporate transparency reports. To meaningfully preserve the privacy protections formerly available under the Fourth Amendment, advocates and legal scholars must continue to articulate how tracking technologies create the ability to conduct dragnet searches akin to those of cell phones in Carpenter and, with the degree of government and private-sector entanglement currently present in society, narrow the third-party doctrine exception to meet that reality. Outcomes like the recent Leaders of Beautiful Struggle v. Baltimore Police Department holding182 are encouraging examples of how new data collection capabilities have changed what is acceptable under past tactics. Advocates should bring all cases of unreasonable searches without a warrant, but be especially mindful of opportunities to litigate the unwarranted tracking of individuals via cameras, location trackers (from phones to vehicles), and sensors because of their widespread application. Beyond Fourth Amendment litigation, legislatures must begin regulating the use of privacy-invasive tools by law enforcement through measures such as surveillance technology oversight laws and data sharing regulations like Massachusetts’ recent law183 that prevents transit authorities from disclosing personal information related to individuals’ transit system use for non-transit purposes. The recent introduction of the Fourth Amendment Is Not For Sale Act, which requires the government to get a court order to compel data brokers to disclose data,184 and the Cell-Site Simulator Warrant Act, which establishes a probable cause warrant requirement for federal, state, and local law enforcement agencies to use a cell-site simulator,185 are also encouraging.
Digital advocates and investigative journalists must continue to investigate how law enforcement is obtaining identifying data from companies and using surveillance technology unbeknownst to the public and legislative representatives. Projects like BuzzFeed News’ Surveillance Nation, which showcases which law enforcement agencies have used ClearviewAI, and The Policing Project’s evaluation of the Baltimore spy plane provide critical facts needed to effectively develop policy that will prevent these harms. Similarly, technology companies should produce transparency reports of when law enforcement has requested identifying data from them, as Amazon Ring has begun to do.

To support interventions like these in your community, discuss the examples listed above with your local community groups and local representatives. To think more deeply about how surveillance technology and capitalism enable wholesale criminalization, check out Action Center on Race and the Economy’s 21st Century Policing: The RISE and REACH of Surveillance Technology186 and STOP LAPD Spying Coalition’s The Algorithmic Ecology: An Abolitionist Tool for Organizing Against Algorithms.187

2. End High-tech Profiling

To stop harmful “smart city” technology, data, and uses, we must also ensure that identifying data is not used to track, sort, or otherwise endanger certain groups of people. This includes the explicit tracking of individuals of certain groups, such as is being done with the Uyghur population in Xinjiang for their “correction” or with women in Lucknow for their “protection,” and the implicit tracking of certain types of individuals by tracking Muslim regions or through self-reinforcing predictive-policing searches. To be clear, there are many scenarios where the collection of demographic information can be in service to justice, such as when it proves unequal treatment, but continual tracking in our public streets without people’s consent is not one of them given the documented risks. Further, governments should be obligated to continually evaluate whether these technologies have a discriminatory effect.

Current interventions that should be expanded to end high-tech profiling include indictments and sanctions for human rights violations, regulation, equity impact assessments, responsible data practices, audits, and corporate refusal to sell to governments for these purposes. Amnesty International has articulated facial recognition technology’s human rights violations and called for it to be banned.188 The Paris Judicial Court’s Crimes Against Humanity and War Crimes unit has indicted senior executives at Nexa Technologies for the company’s sale of surveillance software over the last decade to authoritarian regimes in Libya and Egypt, which resulted in the torture and disappearance of dissidents and other human rights abuses,189 and the U.S. Department of Commerce sanctioned 14 Chinese technology companies over links to human rights abuses against Uyghur Muslims in Xinjiang, including DeepGlint, which is backed by a top Silicon Valley investment firm.190 Legislatures like Washington’s have included anti-discriminatory measures in their proposed People’s Privacy Act protecting those who fail to opt in,191 and Seattle’s surveillance technology law requires that Equity Impact Assessments be conducted for all surveillance technologies as part of its oversight requirements.192 The calls for the EU to ban the use of AI for facial recognition that detects gender or sexuality, or for credit scoring, have also been encouraging. Public scrutiny and campaigns by civil rights organizations193 have successfully influenced companies like Amazon, IBM, and Microsoft194 to put a moratorium on sales of facial recognition technology to governments, Huawei to backtrack on a patent application it filed for a facial recognition system meant to identify Uyghur people,195 and technology companies like Alibaba196 to disavow the use of their technology for the targeting of ethnic groups.

To support interventions like these in your community, discuss the examples listed above with your local community groups and local representatives. To consider more deeply how these tools can be expanded, check out the Civil Rights, Privacy and Technology Table, led by a coalition of civil and digital rights advocacy groups;197 Laura Moy’s article A Taxonomy of Police Technology’s Racial Inequity Problems, which features equity impact assessment application strategies;198 Data 4 Black Lives’ call for #NoMoreDataWeapons;199 and the Urban Institute’s Five Ethical Risks to Consider before Filling Missing Race and Ethnicity Data200 and Creating Equitable Technology Programs: A Guide for Cities.201

3. Minimize the Collection & Use of Identifying Data Everywhere

To stop harmful “smart city” technology, data, and uses, we must specifically protect people from being monitored and targeted, which means we must collectively think more carefully about how to minimize the creation of identifying data that can be abused. In addition to strictly limiting access to identifying data by law enforcement and ending high-tech profiling, we must also reduce the attack surface for potential abuses by law enforcement, corporations, and nefarious actors by minimizing the collection of identifying data everywhere, full stop. To address this, we must explore policies that minimize the creation, storage, and standardization of identifying data and regulate its use. Given the entanglement of private-sector and government surveillance, including governments’ growing dependence on data brokers,202 these policies must consider both the public and private sectors’ roles in creating, managing, and using identifying data if they are to succeed.

Current interventions that should be expanded to minimize the collection and use of identifying data everywhere include data privacy regulations, audits of misuses, demonstrations of security and other risks, and the use of methods that collect less harmful identifying data. In terms of data regulation, legislatures have begun regulating identifying technology, identifying data, and specific uses of the data, including:

- Technology: Legislatures have begun to regulate the government’s use of facial recognition technology in 20 cities and counties, including Boston, Cambridge, Easthampton, Jackson, King County (WA), Madison, Minneapolis, New Orleans, Portland (ME), Portland (OR), Santa Cruz, and Teaneck, during this past year.203 The calls for banning government use of facial recognition technology have steadily increased, with a more significant push by 70 grassroots groups in June 2021 for Congress to pass a nationwide prohibition on biometric surveillance.204 Members of Congress have recently introduced the Facial Recognition and Biometric Technology Moratorium Act of 2021,205 and the House Judiciary Committee recently held a hearing on Facial Recognition Technology: Examining Its Use by Law Enforcement. Further, New York City and Oregon have regulated the use of facial recognition technology by private entities. Local legislatures have also begun to regulate ALPRs206 and surveillance technology broadly.207 Members of Congress have also introduced legislation to require a warrant for cell-site simulator use with the Cell-Site Simulator Warrant Act.208

- Data: In terms of regulating the collection of certain types of identifying data, legislatures have begun regulating the collection of biometric data,209 such as in Portland, OR,210 and New York City,211 and consumer data privacy,212 such as in Virginia and Colorado213 this year. Congress has proposed regulation of data brokers with The Fourth Amendment Is Not For Sale Act214 and of data privacy broadly with the Data Protection Act,215 and has passed legislation that calls for the development of security standards for all IoT devices with the IoT Cybersecurity Improvement Act.216

- Uses: Lastly, legislatures have regulated the use of identifying data by prohibiting the use of facial recognition databases217 or the secondary use of identifying data as with Massachusetts’ transit data.218

Some highlights of these regulations that should be expanded include the requirement of third-party review in surveillance technology regulations219 and governments expanding their commitment to evaluating data rights issues with full-time staff and governance bodies dedicated to these issues, such as Privacy Officials, Commissions, and Advisory Bodies.

Absent new regulation, other tools have been put to use. These include consumer protection litigation related to the use of facial recognition technology, such as when the Federal Trade Commission filed a complaint that Everalbum had deceived consumers about its use of facial recognition technology and its retention of images of users who had deactivated their accounts;220 protective privacy policies;221 and standard contractual clauses related to data rights, such as those offered by Johns Hopkins’ Center for Government Excellence to ensure data is retained by governments as open data222 and those adopted by the EU to govern exchanges and international transfers of personal data.223 There have also been calls for minimizing data-sharing agreements across government agencies, such as when the Electronic Privacy Information Center (EPIC) urged a comprehensive review of DHS’s Information Sharing Access Agreements.224

In addition to refusing to sell identifying tools to police, technology companies are developing new methods and strategies to reduce the amount of identifying data that is created. Technology companies are creating tools to minimize the identifying capacity of images, such as image-altering tools (like Fawkes, which cloaks images by subtly changing pixels; Everest Pipkin, which strips images of identifying metadata; or Anonymizer, CycleGAN, and Deep Privacy, which use GANs to escape detection by creating fake derivative images that look similar to the naked eye), camera applications (like Anonymous Camera, which blurs and pixelates images and strips them of identifying metadata), and video anonymization software (like Brighter.ai or FaceBlur).225 Technology companies such as Apple are using the power of their App Store approval to provide a new AppTrackingTransparency feature that allows users to opt out of tracking by applications on their phone.226 While technology tools can aid in data minimization, technology companies are still beholden to profit incentives, as exhibited by Apple’s decision to abandon encryption technology and cede digital keys and data maintenance to Chinese state employees.227

Advocates have also minimized data collection through obfuscation techniques such as computer vision dazzle makeup that confounds facial recognition technology, disabling phone tracking, concealing messages through steganography, disappearing messages, and encrypted messaging applications.228 These obfuscation techniques should be used as demonstrative campaigns–not long-term policy solutions–and used with caution, as they may be penetrable229 and may cause more suspicion and surveillance by police.

To support interventions like these in your community, you can join campaigns to regulate identifying technologies and data, like facial recognition technology, organized by your local ACLU chapter230 or EFF’s Electronic Frontier Alliance,231 or join global campaigns hosted by Amnesty International and Access Now. To track recent data privacy regulations, you can check out JD Supra’s U.S. Biometric Laws & Pending Legislation Tracker232 and the National Conference of State Legislatures’ 2020 Consumer Data Privacy Legislation roundup.233 To protect your identifying data, you can check out resources like EFF’s Surveillance Self-Defense Playlist: Getting to Know Your Phone.234

4. Provide Meaningful Redress for Those Harmed

Finally, to stop harmful “smart city” technology, data, and uses, we must ensure there are consequences for using identifying data beyond our politically negotiated standards and that those consequences provide proportional redress for those harmed. It is not enough to pass data regulations if they are not enforced. Further, and especially important at this time, with many data regulation gaps, we must ensure that those who are harmed have swift and proportional channels of justice. The status quo of providing limited causes of action for those misidentified, no redress for those overly surveilled, and nominal damages to victims of data breaches is not enough.

Current interventions for data regulation enforcement that should be expanded include the human rights indictments and sanctions mentioned above, as well as remedies available under some regulations: fines for violations (like those under the GDPR235), the cancelation of contracts with violating companies, public sector employee discipline and criminal penalties, private rights of action for those harmed, and suppression of evidence inappropriately obtained. These enforcement provisions are bolstered by whistleblower protections to encourage folks to come forward with knowledge of such misuses. Legislatures should be mindful of scoping what constitutes a misuse of identifying data and penalize it appropriately (for example, Van Buren v. United States236 recently found that misuse of databases that one was otherwise authorized to use would not violate the Computer Fraud and Abuse Act, which comes with steep criminal penalties). In addition to penalties for misuse, individuals must have recourse when their identifying data has been misused against them. Private rights of action under surveillance technology laws are available in Berkeley, Cambridge, Davis, Grand Rapids, Lawrence, Oakland, San Francisco, Santa Clara, Seattle, and Somerville, and suppression remedies are available in Lawrence and Somerville.237 The Illinois Biometric Information Privacy Act,238 which has provoked a series of lawsuits, including a recent $650 million Facebook settlement, is also an encouraging example of redress.

To support interventions like these in your community, discuss the examples listed above with your local community groups and local representatives. To think more deeply about how regulation can provide those harmed with justice, check out analyses of local privacy laws like Berkeley Law’s Samuelson Law, Technology, and Policy Clinic’s Local Surveillance Oversight Ordinances239 that analyze available remedies and their implementation track record.

Build Our Collective Capacity to Evaluate How Technology Impacts Democracy

5. Mandate Transparency & Legibility for Public Technology & Data

To build our collective capacity to evaluate how technology impacts democracy, it is imperative that we understand what our governments are doing in the first place. Holding our government representatives accountable is crucial to democracy. This means not only being aware of which “smart city” technologies are augmenting our neighborhoods but also firmly understanding what data they are collecting and what the implications of that collection are.

Current interventions for mandating transparency and legibility for public technology and data that should be expanded include government transparency regulations and practices, campaigns to watch the watchers, third-party audits, and corporate transparency reports. Legislatures have called for transparency related to “smart city” technology with surveillance technology laws and with practices such as providing discoverable documentation of procurement via the Open Contracting Partnership240 and documenting the call for such tools, as with Boston’s New Urban Mechanics’ Beta Blocks program, which posted a broad “Smart City” Request for Information (RFI)241 in 2017 and publicly posted the 100+ responses online.242 Beyond broadcasting proposal responses and procurement activity, governments should provide context and facilitate feedback loops related to novel technologies, as Amsterdam243 and Helsinki244 have done for AI and Seattle245 has done for “surveillance technologies.”