Featured in the Belfer Center Spring 2021 Newsletter

AIs are becoming hackers. They're able to find exploitable vulnerabilities in software code. They're still not very good at it, but they'll get better. It's the kind of problem that lends itself to modern machine learning techniques: an enormous amount of input data, pattern matching, and goals that permit reinforcement. We have every reason to believe that AIs will continue to get better at this task, and will soon surpass humans. They'll even come up with hacks that we humans would judge creative.

Software isn't the only code that could be hacked by AIs. The tax code consists of rules (algorithms) with inputs and outputs. Humans already hack the tax code. We call what they find "loopholes," and their exploits "tax avoidance strategies." And we call the hackers "tax accountants" or "tax attorneys."

There's no reason to think that an AI can't be trained on the country's — or the world's — tax laws, government opinions, and court rulings. Or on the laws and rules of financial markets. Or of legislative processes, or elections. And when they are, what sorts of novel hacks will they discover? And how will that affect our society?

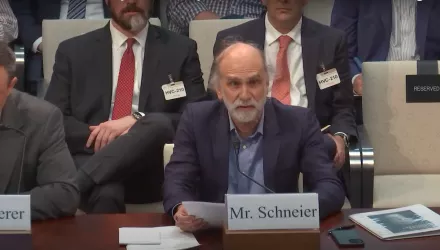

I just published an essay with the Belfer Center's Cybersecurity Project and the Council for the Responsible Use of AI that explores this question. In "The Coming AI Hackers," I generalize the notion of hacking to include these broader social systems, and then look at how AIs are hacking us humans and then how they might hack human systems of governance. Along the way, I discuss Google's Go-playing AI, robot dogs, King Midas, genies (be very careful how you word your desires), and Deep Thought's answer to "the ultimate question of life, the universe, and everything."

AI vulnerability finding can benefit the defender. A software company could employ these tools to secure its own code before release. We can imagine a time when software vulnerabilities are a thing of the past. But the transition period will be dangerous: all the legacy code will be vulnerable, and patching it will be difficult or impossible.

We can also imagine the same trajectory for other systems. New tax laws could be tested for loopholes. It doesn't mean they'll be patched, of course, but they will become part of the debate. But here, again, I worry about the transition period, when newly capable AIs are turned loose on our legacy rules. We don't have governance structures that can deal with an AI that finds hundreds of loopholes in our tax laws, or in the regulations that govern financial markets. That level of abuse will overwhelm the underlying systems.

How realistic any of this is depends a lot on the details, and I explore that in the essay. The summary is that while this is still science fiction, it's not stupid science fiction. And we should start thinking about the implications of AI hackers now, before they become reality.

"Exploring a World of AI Hackers." Belfer Center Newsletter, Belfer Center for Science and International Affairs, Harvard Kennedy School. (Spring 2021)